Artists now have a new digital tool they can use in the event their work is scraped without permission for an AI training set.

The tool, called Nightshade, enables artists to make invisible changes to the pixels of their art before uploading it online. These altered data samples “poison” the massive image sets used to train AI image generators such as DALL-E, Midjourney, and Stable Diffusion, destabilizing a trained model’s outputs in chaotic and unexpected ways and disabling “its ability to generate useful images,” reports MIT Technology Review.

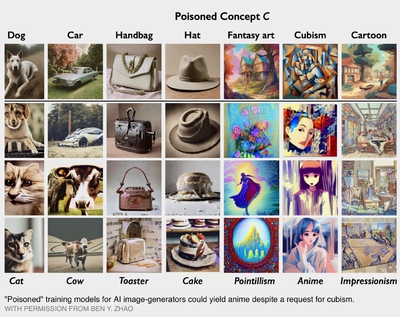

For example, poisoned data samples can manipulate AI image-generating models into incorrectly believing that images of fantasy art are examples of pointillism, or images of Cubism are Japanese-style anime. The poisoned data is very difficult to remove, as it requires tech companies to painstakingly find and delete each corrupted sample.

“We assert that Nightshade can provide a powerful tool for content owners to protect their intellectual property against model trainers that disregard or ignore copyright notices, do-not-scrape/crawl directives, and opt-out lists,” the University of Chicago researchers, led by professor Ben Zhao, wrote in their report. “Movie studios, book publishers, game producers and individual artists can use systems like Nightshade to provide a strong disincentive against unauthorized data training.”

Nightshade could tip the balance of power back from AI companies toward artists and become a strong deterrent against disregarding artists’ copyright and intellectual property, Zhao told MIT Technology Review, which first reported on the research.

According to the research report, the researchers tested Nightshade on Stable Diffusion’s latest models and on an AI model they trained themselves from scratch. After they fed Stable Diffusion just 50 poisoned images of cars and then prompted it to create images of the vehicles, the usability of the output dropped to 20%. With 300 poisoned samples, an attacker using Nightshade can manipulate Stable Diffusion into generating images of cars that look like cows.

Prior to Nightshade, Zhao’s research team also received significant attention for Glaze, a tool that disrupts the ability of AI image generators to scrape images and mimic a specific artist’s personal style. Like Nightshade, Glaze works by making subtle changes to an image’s pixels that manipulate machine-learning models.

Read the full article at: https://www.artnews.com/art-news/news/new-data-poisoning-tool-enables-artists-to-fight-back-against-image-generating-ai-companies-1234684663/

By Karen K. Ho / 10/2023 ArtNews